Growth Acceleration Partners | June 19, 2025

IT Outsourcing: 8 Trends Tech Leaders Need to Know in 2025

Cloud-native platforms, machine learning, edge computing — the digital landscape is evolving at breakneck speed. But the ability to innovate doesn’t just depend on vision anymore — it hinges on access to the right talent, the right tools, and the right partners. That’s why IT outsourcing has transformed. It’s no longer a reactive move to […]

Growth Acceleration Partners | June 18, 2025

What Is Technical Debt in AI-Generated Codes & How to Manage It

Artificial intelligence is now a part of every software development team’s workflow. Developers claim it improves their productivity, but at what cost? GitClear’s latest research on AI-generated code quality showed that coding with AI is increasing code duplication and reducing the quality of development. The report also emphasized that it increases the rate at which […]

Growth Acceleration Partners | June 18, 2025

Data Science Applications in Retail: Examples, Benefits, and Challenges

Your competitors probably know more about your customers than you do, and they are using that information to win fiercely. If not, how else would retail stores like Target, DSW, and Macy’s have predicted the need for more physical stores even after the COVID-19 pandemic? The fact is, they had access to the right data, […]

Growth Acceleration Partners | June 2, 2025

Building Your AI Future: How GAP Tackles Your Most Critical Software, Data Engineering and Staffing Challenges to Actually Make AI Work

By Jocelyn Sexton, VP of Marketing at Growth Acceleration Partners

A year or so ago, it was totally appropriate to ask a CTO, “Is AI factoring into your technology roadmap?” But ask an innovation leader that question today, and you’ll seem uninformed or even insulting. Oh, how quickly things change! AI is now an […]

Growth Acceleration Partners | May 27, 2025

Use Cases of Generative AI in Ecommerce

You didn’t start an e-commerce business to become a copywriter, marketer, or ad specialist. Just like every other business owner, you started it to sell products and grow revenue. But today, that’s not enough. Running a successful online store demands high-quality content, fast customer service, smart targeting, and constant adaptation to changing customer expectations. It’s […]

Growth Acceleration Partners | May 20, 2025

Strategic Synergy: How Thinktiv and GAP Accelerate Growth for PE Firms and Their Portfolio Companies

By Jocelyn Sexton, VP of Marketing at Growth Acceleration Partners

In the fast-paced world of private equity, value creation is a race against the clock. PE firms have a limited window, often just three to four years, to maximize the growth and exit potential of their portfolio companies. This urgency demands precision, strategy […]

Growth Acceleration Partners | May 13, 2025

Microsoft Solutions Partner Benefits: Access Expertise & Resources with GAP

Experience only matters if you keep sharpening it. At GAP, "good enough" makes us break out in hives, so we relentlessly and methodically stack up new Microsoft certifications on top of our long-standing Solutions Partner status. Why? Because tech (and you and your business) don't stand still, and neither do we. What's New? More […]

Growth Acceleration Partners | May 13, 2025

AI Workshops: Driving Practical, Actionable Results

By now, the business case for AI adoption is clear. You’ve read the think pieces, tracked the developments, and seen the impact of AI technology in your industry — perhaps even your organization. You can be sure your competition has, too. That’s why planning the next step in your AI journey is so important. However, […]

Growth Acceleration Partners | May 13, 2025

From Data Pipeline to AI-Automated Insights Engine

It’s a fact – modern business runs on data. Organizations from manufacturing to marketing — and fintech to healthcare — depend on data pipelines to power core functions from customer service to boardroom strategy. But as business requirements grow more complex, data volumes explode. And traditional approaches to building and managing data pipelines struggle to […]

Growth Acceleration Partners | May 13, 2025

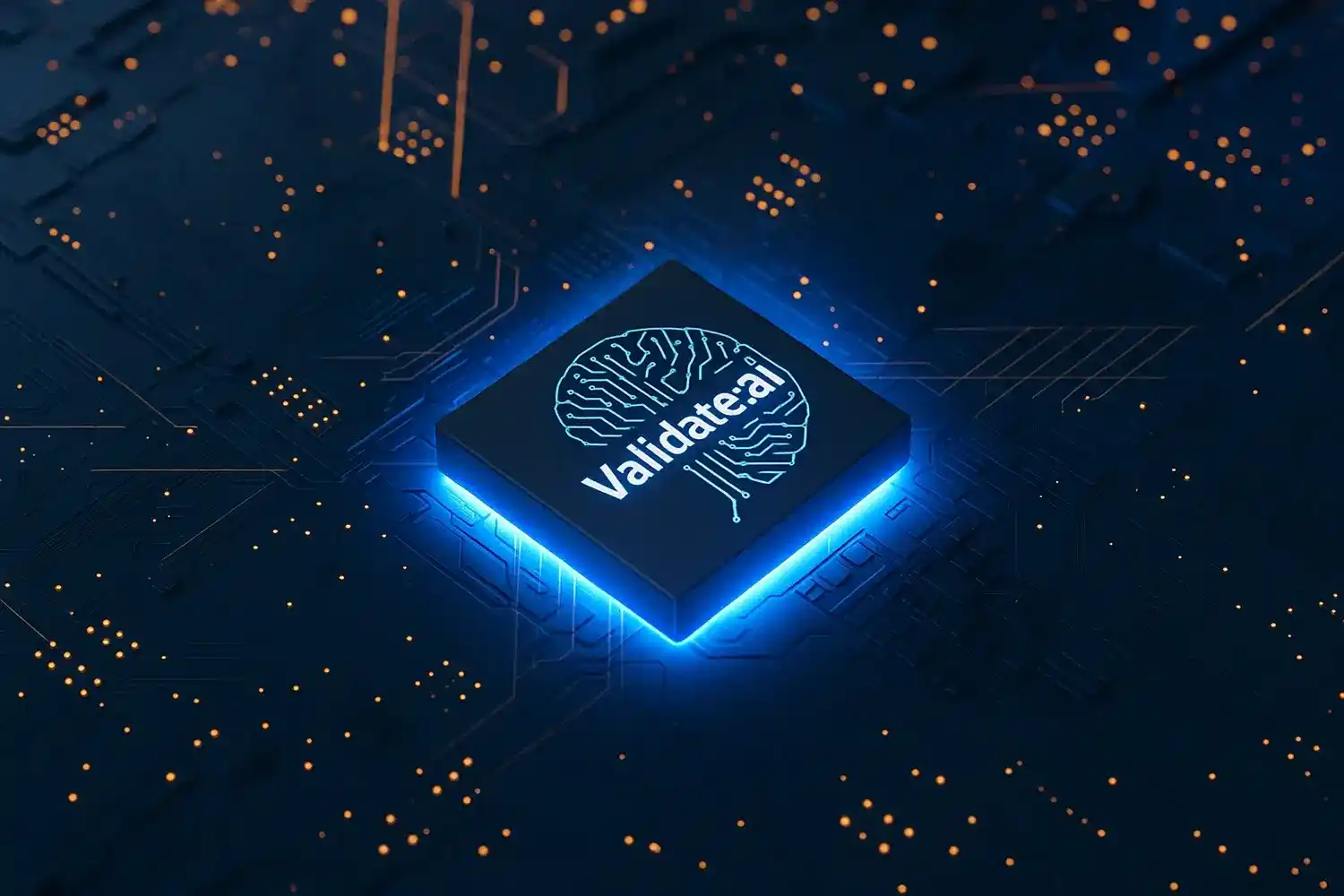

Validate:AI, Verify AI Innovations Before You Scale

Decision makers, get ready. AI will play an increasingly critical role in businesses of all sizes, so adopting an innovation mindset now will help your organization adapt quickly, differentiate itself from the competition and drive sustainable, long-term growth. Deciding which innovation mindset is right for your business, though, is a challenge. “Move fast and break […]
